Example Workflows

The following example workflows are provided to simplify getting started with Flux. These workflows are easily modified and extended to meet your requirements.

These flows use action prescripts and postscripts to report status and the name of the file being moved. The following example prescript updates the status message in the Operations Console during a file copy:

Prescript

flowContext.setStatus("Copying: " + flowContext.get("file"));

Bulk File Copy (Basic)

This workflow scans a directory and copies all the files it finds. A bulk file copy is useful when all the files must be copied at a single point in time. If the bulk file copy fails, rerunning the flow copies all the files again.

This example assumes that a runtime configuration file has been defined, with runtime configuration properties that identify the source and target directories, as follows:

Required Runtime Configuration File Properties

/source=c:/in/*.*

/target=c:/out
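To make the copy semantics concrete, here is a minimal Java sketch of the same bulk copy outside Flux. It is an illustration, not the File Copy Action's implementation: it assumes the /source value is split into a directory (c:/in) and a glob (*.*), and it overwrites existing targets so that a rerun copies every file again. Note that in a Java glob, *.* only matches names that contain a dot.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.List;

class BulkCopySketch {
    // Copies every regular file in sourceDir that matches the glob into targetDir.
    // Existing targets are overwritten, so rerunning the copy repeats all of it.
    static List<String> bulkCopy(Path sourceDir, String glob, Path targetDir) throws IOException {
        Files.createDirectories(targetDir);
        List<String> copied = new ArrayList<>();
        try (DirectoryStream<Path> files = Files.newDirectoryStream(sourceDir, glob)) {
            for (Path file : files) {
                if (!Files.isRegularFile(file)) {
                    continue; // skip subdirectories
                }
                Files.copy(file, targetDir.resolve(file.getFileName()),
                        StandardCopyOption.REPLACE_EXISTING);
                copied.add(file.getFileName().toString());
            }
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        // Values mirroring the /source=c:/in/*.* and /target=c:/out properties.
        System.out.println(bulkCopy(Paths.get("c:/in"), "*.*", Paths.get("c:/out")));
    }
}
```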

Download here: Bulk-Copy.ffc

Mass File Copy and Prepend Today’s Date to Each Copied File (Basic)

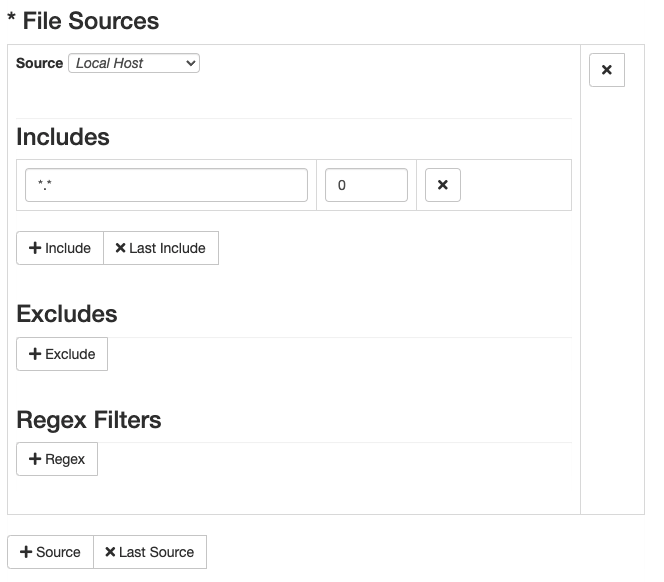

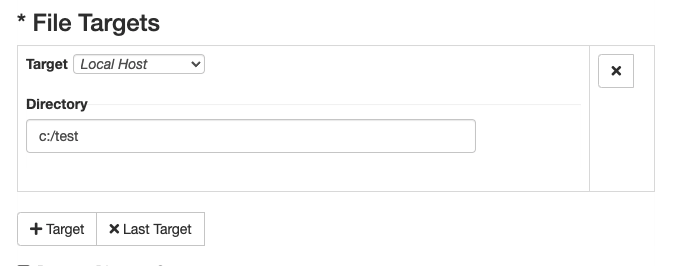

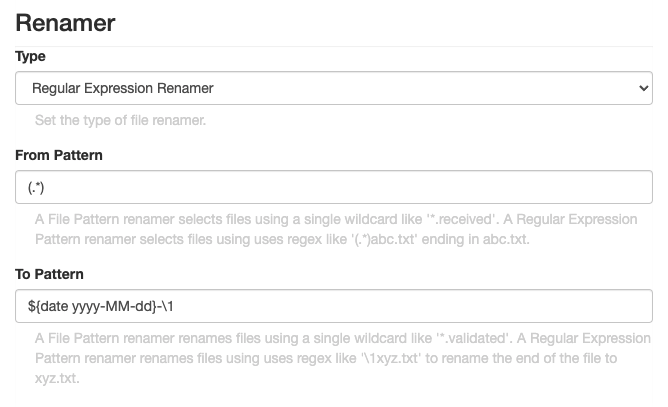

This workflow scans a directory and copies all the files it finds. It consists of a single File Copy Action, with a Regular Expression Renamer configured to prepend the date to each copied file.

Note in the illustration below that the From Pattern is (.*). This regular expression captures the file name into a group, which the To Pattern later references as \1 (since it is the first and only group captured).

The To Pattern is ${$date yyyy-MM-dd}-\1. This pattern prepends the date in a form such as 2022-06-18, followed by a dash (-), to each file name copied from the current directory into c:\test.
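The renaming rule can be checked with a short Java sketch. It only illustrates the pattern and is not the Regular Expression Renamer itself: Java reads the captured group with group(1) rather than \1, and the date is passed in explicitly so the result is predictable.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class PrependDateSketch {
    // From Pattern: (.*) captures the whole file name as group 1.
    private static final Pattern FROM = Pattern.compile("(.*)");
    // Same date format as ${$date yyyy-MM-dd} in the To Pattern.
    private static final DateTimeFormatter DATE = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    // Builds the To Pattern result: <date>-<group 1>.
    static String rename(String fileName, LocalDate date) {
        Matcher m = FROM.matcher(fileName);
        if (!m.matches()) {
            return fileName; // (.*) matches any single-line name; kept for other patterns
        }
        return date.format(DATE) + "-" + m.group(1);
    }

    public static void main(String[] args) {
        System.out.println(rename("report.csv", LocalDate.of(2022, 6, 18)));
    }
}
```

For example, rename("report.csv", LocalDate.of(2022, 6, 18)) returns 2022-06-18-report.csv.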

Download here: MassFileCopyWithRegexToPrependDate.ffc

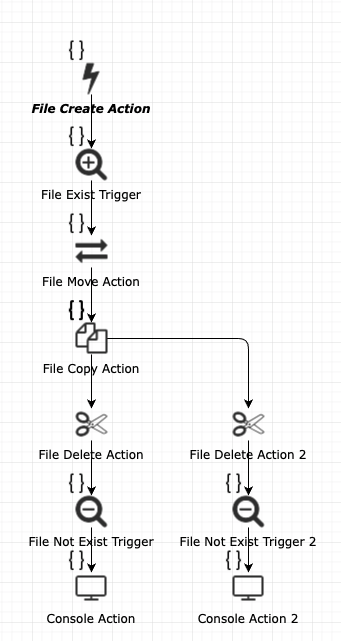

Generic File Move, Copy, Rename, Delete (Basic)

This workflow creates a file, tests for its existence, moves it to another directory, copies it back to the directory it was moved from, renames it, deletes both files, and then tests that neither file still exists.

This example assumes that a runtime configuration file has been defined, with runtime configuration properties that identify the file names and directories, as follows:

Required Runtime Configuration File Properties

/new_file=myFile.txt

/rename_file=yourFile.txt

/dir1=c:\NEW

/dir2=c:\MOVE
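The sequence of file actions can be sketched with java.nio.file to make the order of operations concrete. This is an illustration under assumptions, not the sample workflow: the parameters mirror the /new_file, /rename_file, /dir1 and /dir2 properties, and "copies it back" is read as copying the moved file from dir2 back into dir1 and then renaming that copy.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

class FileActionsSketch {
    // Runs create, exists, move, copy back, rename, delete, and not-exists checks.
    // Returns true when every existence check passed.
    static boolean run(Path dir1, Path dir2, String newFile, String renameFile) throws IOException {
        Files.createDirectories(dir1);
        Files.createDirectories(dir2);
        Path created = dir1.resolve(newFile);
        Files.createFile(created);                                       // create
        boolean ok = Files.exists(created);                              // test for existence
        Path moved = Files.move(created, dir2.resolve(newFile));         // move to dir2
        Path copiedBack = Files.copy(moved, dir1.resolve(newFile));      // copy back to dir1
        Path renamed = Files.move(copiedBack, dir1.resolve(renameFile)); // rename the copy
        Files.delete(moved);                                             // delete both files
        Files.delete(renamed);
        return ok && Files.notExists(moved) && Files.notExists(renamed); // verify both are gone
    }

    public static void main(String[] args) throws IOException {
        // Values mirroring the /dir1, /dir2, /new_file and /rename_file properties.
        System.out.println(run(Paths.get("c:\\NEW"), Paths.get("c:\\MOVE"), "myFile.txt", "yourFile.txt"));
    }
}
```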

Download here: FLUX-SAMPLES-FileActions.ffc

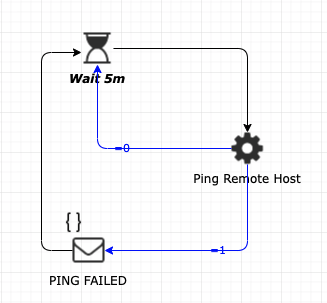

Ping a Host and Notify if Down (Basic)

This workflow sends a ping request to a host and sends an email notification if the host is down.

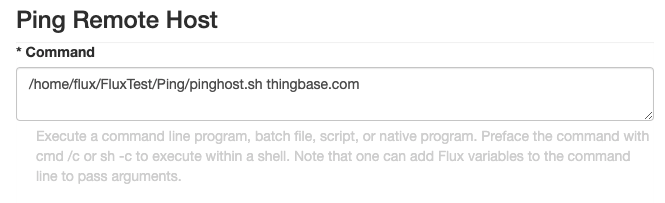

This example assumes that a shell script has been defined in the directory /home/flux/FluxTest/Ping. Modify the Process Action to point to a directory of your choosing, or enter the full path to the script or executable in the Command text field. Paths can be relative to the directory where Flux was installed, or absolute (as in this example).

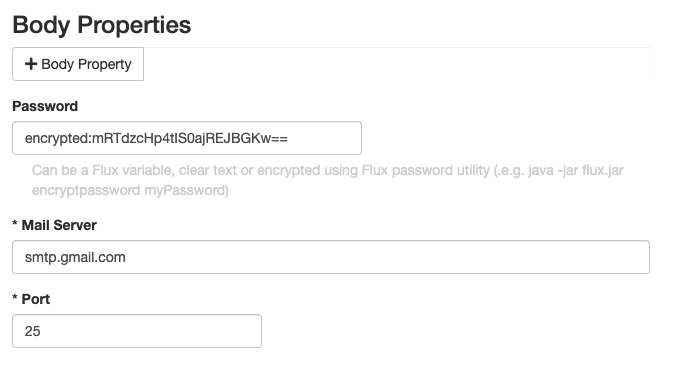

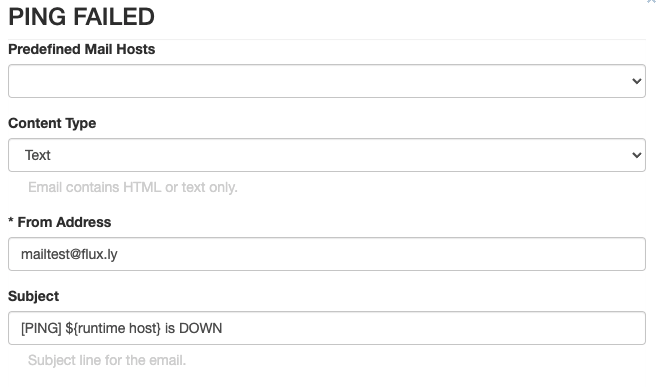

You will also need to modify the Mail Action to use the correct settings for your mail server.

Required Shell Script for Linux/Unix

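#!/bin/sh
# Exits 0 if every host in $1 answers a single ping, or 1 if any host does not.
host=$1
# $host is left unquoted so a space-separated list of hosts is split into words.
for myHost in $host
do
  # Pull the "N received" count out of ping's summary line.
  received=$(ping -c 1 "$myHost" | grep 'received' | awk -F',' '{ print $2 }' | awk '{ print $1 }')
  # No summary line (for example, an unknown host) counts as zero replies.
  if [ "${received:-0}" -eq 0 ]; then
    exit 1
  fi
done
exit 0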

Required Batch File for Windows

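@echo off
rem Exit 0 only if %1 sends a real reply ("TTL=" appears); ping alone can exit 0 on "Destination host unreachable".
ping -n 1 %1 | find "TTL=" >nul
exit /b %errorlevel%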

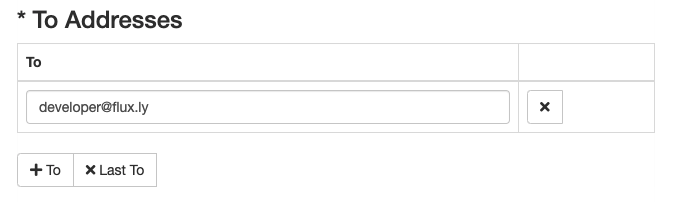

Note: Check that your antivirus software doesn’t have any rules blocking SMTP. For example, McAfee Enterprise Virus protection, which is standard at many corporations, has a default setting that blocks SMTP ports 25 and 587. This default rule needs to be modified to add java.exe and telnet.exe as programs allowed to use the mail ports, as illustrated in the attached screenshot.

Download here: FLUX-SAMPLES-Ping.ffc

Mail Trigger that prints the Subject and Body of the email to STDOUT (Basic)

This workflow polls the ‘Inbox’ of a test mail user and fires when new mail arrives; it then prints the email subject and body to STDOUT. Export the workflow to the engine, send an email to ‘fluxtemplate@gmail.com’ (without quotes), and check that the Console Action’s output matches the subject and body.

Download here: FLUX-SAMPLES-MailTrigger.ffc

Flow Chart Trigger that waits for another workflow to finish copying files and then iterates through them and prints to STDOUT (Basic)

This workflow uses a Flow Chart trigger to wait until the workflow defined in its ‘Namespace’ property finishes, then polls a folder and iterates through the list of files found. The ‘Namespace’ property can also be used to specify entire namespaces or specific workflows, as explained in Flow Chart Trigger.

You will need to edit the File Exist trigger to point to a valid directory on your system.

This example waits for the attached /Incoming/Daily workflow to finish. You will also need to change the ‘Source’ and ‘Target’ of the File Copy action to valid directories on your system.

Download here: Daily-FlowChartTrigger.ffc; Incoming-Daily.ffc

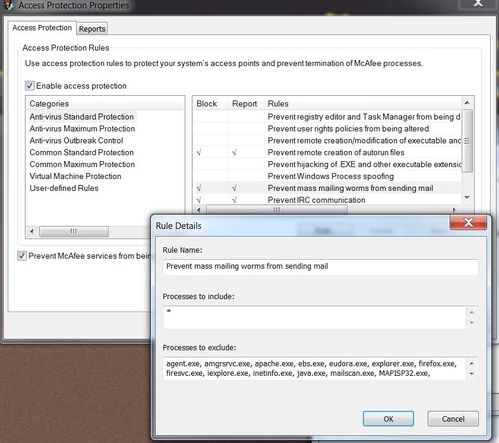

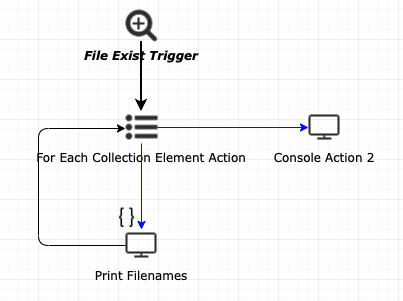

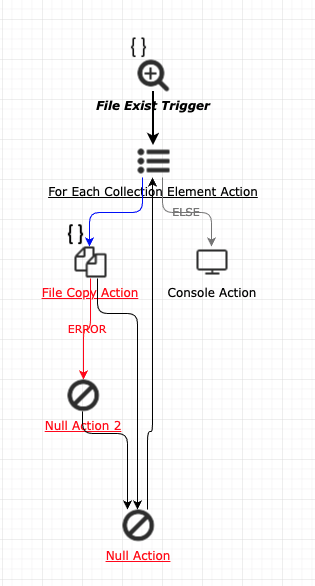

Using a For Each Collection Element action to iterate through a collection (Basic)

This workflow scans a folder for files, iterates through them, and prints out the filenames one at a time.

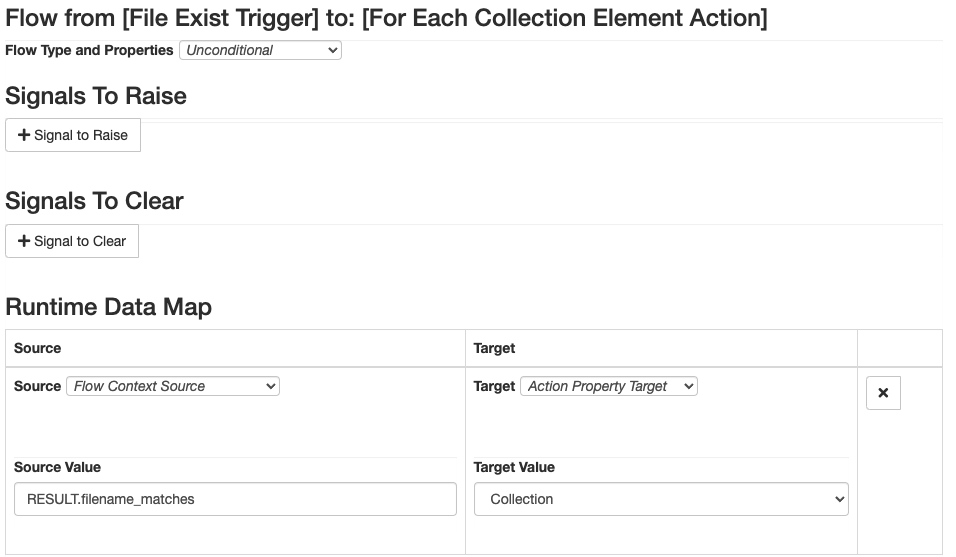

It uses a File Exist trigger to poll for files, then Runtime Data Mapping to pass the resulting collection to a For Each Collection Element action.

The ‘Print Filenames’ Console action references ${i}, where ‘i’ is the Loop Index of the For Each Collection Element action. With Variable Substitution, you can reference that variable from anywhere in the workflow; keep in mind that it is empty until the For Each Collection Element action runs.
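To see how ${i} resolves on each pass of the loop, the behavior can be modeled in plain Java. This is a hypothetical model, not Flux's Variable Substitution engine: a Map stands in for the flow context, and substitute() is an illustrative helper that replaces ${name} tokens with the variable's value, or with nothing if the variable is not set yet.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class ForEachSketch {
    private static final Pattern VAR = Pattern.compile("\\$\\{(\\w+)\\}");

    // Hypothetical helper: replaces ${name} with the variable's value,
    // or with an empty string when the variable has not been set yet.
    static String substitute(String template, Map<String, Object> context) {
        Matcher m = VAR.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            Object value = context.get(m.group(1));
            m.appendReplacement(out, Matcher.quoteReplacement(value == null ? "" : value.toString()));
        }
        m.appendTail(out);
        return out.toString();
    }

    // Models the For Each Collection Element action: each element is stored
    // under the loop index name "i" before the Console action runs.
    static List<String> printFilenames(List<String> files) {
        Map<String, Object> flowContext = new HashMap<>();
        List<String> printed = new ArrayList<>();
        for (String file : files) {
            flowContext.put("i", file);
            printed.add(substitute("${i}", flowContext));
        }
        return printed;
    }

    public static void main(String[] args) {
        printFilenames(List.of("a.txt", "b.txt")).forEach(System.out::println);
        // Before the loop runs, ${i} resolves to an empty string.
        System.out.println("[" + substitute("${i}", new HashMap<>()) + "]");
    }
}
```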

You will need to modify the File Exist trigger’s source folder to a valid path in your environment.

Download here: FLUX-SAMPLES-IterateFiles.ffc

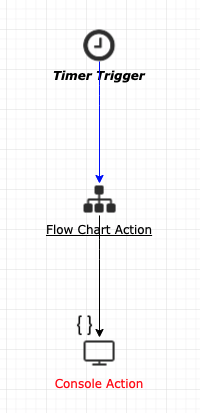

Calling a workflow using a FlowChart action (Basic)

This workflow exports a workflow stored in the Workflow Repository to the engine under a new name, waits for it to finish, and then continues executing.

To run this example, you’ll need to download both workflows and upload them to your Repository. Then start the /FLUX-SAMPLES/FlowChartAction workflow, which in turn starts the child workflow.

Download here: FLUX-SAMPLES-FlowChartAction.ffc; FLUX-SAMPLES-ChildWorkflow.ffc

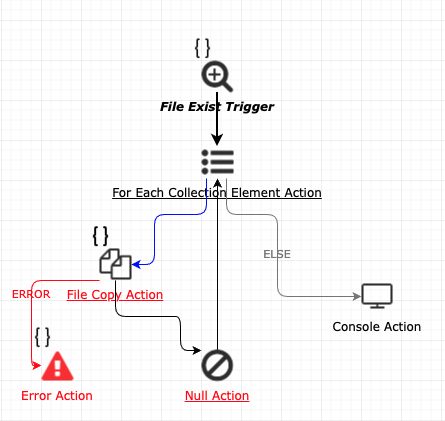

File Copy that Fails and Exits on Error (Intermediate)

This workflow scans a directory and copies the files one at a time. If a file copy fails, the workflow is set to ‘FAILED’ in the Operations Console. From the console, you can either ‘restart’ the workflow to run it from the beginning, or ‘recover’ the workflow to resume the flow at the point where the file copy failed.
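The difference between restart and recover can be modeled as a per-file loop that stops at the first failure. This is a sketch under assumptions, not how the Flux engine records its recovery point: the copy step is passed in as a function (in practice it would wrap a real file copy), and the returned index stands in for the point where a recovered flow resumes.

```java
import java.util.List;
import java.util.function.Predicate;

class ExitOnErrorSketch {
    // Copies files in order, starting at startIndex, and stops at the first failure.
    // Returns the index of the failed file, or -1 when every copy succeeded.
    static int copyFrom(List<String> files, int startIndex, Predicate<String> copy) {
        for (int i = startIndex; i < files.size(); i++) {
            if (!copy.test(files.get(i))) {
                return i; // the flow would be marked FAILED here
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<String> files = List.of("a.txt", "b.txt", "c.txt");
        // First run: b.txt fails, so a.txt is copied and c.txt is never reached.
        int failedAt = copyFrom(files, 0, f -> !f.equals("b.txt"));
        System.out.println("failed at index " + failedAt);
        // Restart would call copyFrom(files, 0, ...) and copy a.txt again;
        // recover resumes at the failed file once the problem is fixed.
        System.out.println("recovered: " + copyFrom(files, failedAt, f -> true));
    }
}
```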

This example assumes that a runtime configuration file has been defined, with runtime configuration properties that identify the source and target directories, as follows:

Required Runtime Configuration File Properties

/source=c:/in/*.*

/target=c:/out

Download here: FLUX-SAMPLES_FileCopy-ExitOnError.ffc

File Copy that Continues on Error (Intermediate)

This workflow scans a directory and copies the files one at a time, logging a notification if a file copy fails. The workflow then continues processing the remaining files.
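Compared with the exit-on-error workflow above, the essential change is that a failure is recorded instead of ending the loop. A minimal Java sketch, assuming the copy step is supplied as a function and that printing to stderr stands in for the notification:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

class ContinueOnErrorSketch {
    // Attempts every copy; a failure is logged and the loop moves on.
    // Returns the names of the files that failed to copy.
    static List<String> copyAll(List<String> files, Predicate<String> copy) {
        List<String> failed = new ArrayList<>();
        for (String file : files) {
            if (!copy.test(file)) {
                System.err.println("Copy failed: " + file); // stands in for the notification
                failed.add(file);
            }
        }
        return failed;
    }

    public static void main(String[] args) {
        List<String> failed = copyAll(List.of("a.txt", "b.txt", "c.txt"), f -> !f.equals("b.txt"));
        System.out.println("failed: " + failed);
    }
}
```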

This example assumes that a runtime configuration file has been defined, with runtime configuration properties that identify the source and target directories, as follows:

Required Runtime Configuration File Properties

/source=c:/in/*.*

/target=c:/out

Download here: FLUX-SAMPLES_FileCopy-ContinueOnError.ffc

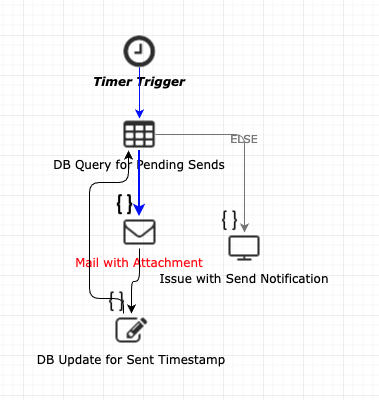

Report or Data Delivery (Intermediate)

This Flux workflow starts with a simple database table that contains an email address, a file name, and a timestamp of when the file was sent (initially empty). Every five minutes a timer trigger fires and runs a database query for records where the sent timestamp is empty. For each record found, a Flux mail action builds an email using a customizable email template and attaches the file specified in the record. The mail is sent, and the time it was sent is written to the database record. If the mail fails or the file is not found, a notification is sent.

This example assumes that a database table named ‘Customers’ has been defined with an email address, file path (to the file that needs to be delivered), and timestamp field (all defined as character or varchar fields).
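The per-record logic of one timer firing can be sketched in Java with an in-memory list standing in for the Customers table. Everything here is hypothetical: the Customer class, its field names, and the sendMail function are illustrative stand-ins for the table, the database query, and the Flux mail action, and a failed send is treated as either a mail failure or a missing file.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

class ReportDeliverySketch {
    // Hypothetical row of the Customers table; sent is empty until the mail goes out.
    static final class Customer {
        final String email;
        final String filePath;
        String sent;

        Customer(String email, String filePath, String sent) {
            this.email = email;
            this.filePath = filePath;
            this.sent = sent;
        }
    }

    // One timer firing: select unsent rows, mail each file, stamp successes.
    // Returns the rows that failed so a notification can be raised for them.
    static List<Customer> deliverPending(List<Customer> table, Predicate<Customer> sendMail, Instant now) {
        List<Customer> failures = new ArrayList<>();
        for (Customer row : table) {
            if (row.sent != null && !row.sent.isEmpty()) {
                continue; // already delivered
            }
            if (sendMail.test(row)) {
                row.sent = now.toString(); // timestamp stored as text, like the varchar column
            } else {
                failures.add(row); // mail failure or file not found
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        List<Customer> table = List.of(
                new Customer("a@example.com", "/reports/a.pdf", null),
                new Customer("b@example.com", "/reports/missing.pdf", ""),
                new Customer("c@example.com", "/reports/c.pdf", "2022-06-18T00:00:00Z"));
        List<Customer> failed = deliverPending(table, c -> !c.filePath.contains("missing"), Instant.now());
        failed.forEach(c -> System.out.println("Notify: could not deliver to " + c.email));
    }
}
```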

Download here: FLUX-SAMPLES-DB+Email.ffc

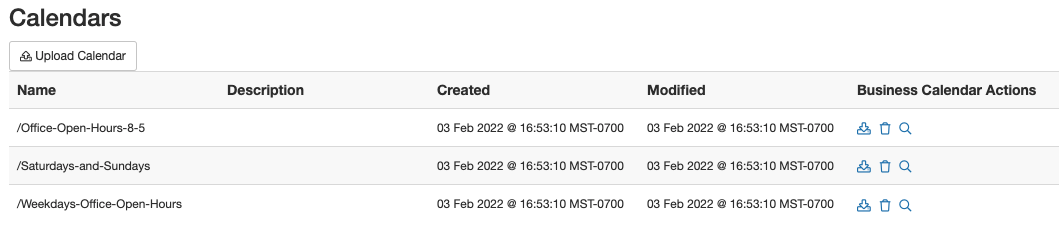

Adding and Using a Business Interval (Intermediate - Requires Scripting)

The following script code creates a business interval for use in restricting workflows to only run within specified times and dates.

Source for Creating and Saving a Business Interval

Create a workflow and add a Null Action to it. Paste the above code into the Null Action's Prescript, then export the workflow to the engine. Flux runs the script, creates the business intervals/calendars it defines, and adds them to the Flux Repository, as illustrated below:

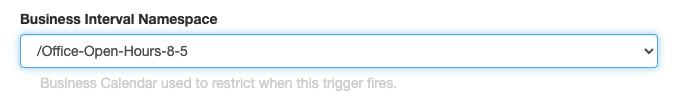

After this business interval is loaded into the repository, it can be used in time expressions throughout Flux, including in any Timer Trigger, as shown below:

To apply your business interval in a Timer Trigger, you need to include special characters in the trigger's time expression. These characters are:

- b

- h

In a Cron-style time expression, the “b” character indicates all values for the given column that are included in the business interval, while the “h” character indicates all values that are excluded. For example, the Cron-style expression 0 0 0 *b indicates “fire at the top of the hour for every hour value included in the interval”.

More information, including further uses of Cron-style expressions with business intervals, can be found in Business Intervals.

Download the above workflow with the Null Action already preconfigured with the Prescript here: FLUX-SAMPLES-Create-Business-Calendars.ffc

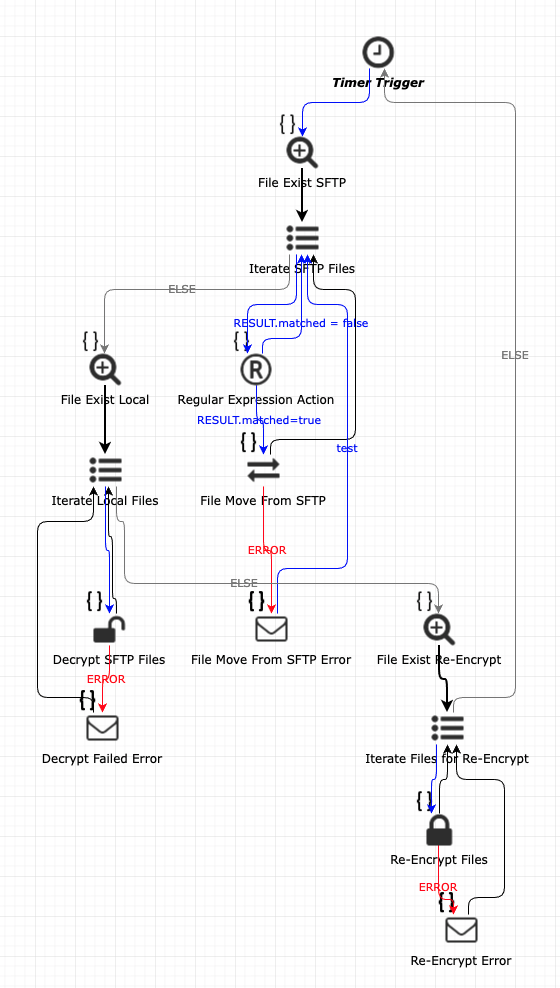

SFTP File Transfer Workflow (Advanced)

A common pattern encountered by workflow designers involves a workflow that fires at a given time every weekday, then polls a remote server for files to fetch and process. Such workflows often need to select files for processing based on a naming convention, such as a file description followed by a valid date in the filename. A best practice in Flux workflow design is to accommodate exceptional situations during processing. Customers often use wildcard processing to select and process sets of files, not realizing that if one file in the set fails to process correctly, all the files that follow it in the set will fail to process.

The workflow below starts with a timer trigger that fires at specified intervals. A File Exist Trigger then fires, collecting the set of filenames available to fetch from the remote server. Next, a For Each Collection Element Action submits each filename to a Regular Expression Action, which checks whether a substring of the filename matches a valid date (in yyyy-MM-dd format). If a filename matches the regular expression, it is submitted to a File Move Action, which moves the file itself to a local folder to be decrypted. If a filename does not match, the flow goes back to the For Each Collection Element Action to pick up the next filename in line.
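The filename check can be prototyped in Java before configuring the Regular Expression Action. This sketch assumes the date can appear anywhere in the name as yyyy-MM-dd; it additionally parses each match with java.time, so a name with the right shape but an impossible date (such as 2022-13-45) is rejected. That extra validation is a choice made for the sketch, not necessarily what the sample's regular expression does.

```java
import java.time.LocalDate;
import java.time.format.DateTimeParseException;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class DatedFileFilterSketch {
    // Finds a yyyy-MM-dd shaped substring anywhere in the file name.
    private static final Pattern DATE = Pattern.compile("\\d{4}-\\d{2}-\\d{2}");

    // True when the name contains a substring that is a real calendar date.
    static boolean hasValidDate(String fileName) {
        Matcher m = DATE.matcher(fileName);
        while (m.find()) {
            try {
                LocalDate.parse(m.group()); // ISO_LOCAL_DATE, i.e. yyyy-MM-dd, strict
                return true;
            } catch (DateTimeParseException e) {
                // right shape but not a real date; keep looking
            }
        }
        return false;
    }

    // Mirrors the For Each loop: matching names go on to the move step,
    // non-matching names are skipped and the loop picks up the next one.
    static List<String> selectForMove(List<String> remoteFiles) {
        List<String> selected = new ArrayList<>();
        for (String name : remoteFiles) {
            if (hasValidDate(name)) {
                selected.add(name);
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        System.out.println(selectForMove(List.of(
                "invoices-2022-06-18.csv", "invoices-latest.csv", "invoices-2022-13-45.csv")));
    }
}
```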

After fetching the remote files, the workflow uses another file trigger to pick up the filenames of local files to be decrypted. This pattern of using a For Each Collection Element Action provides per-file processing: in this case, if the decryption action fails, the Flux Operations Console has access to the name of the file that failed, rather than a list of files with no indication of which one failed to decrypt. The same pattern is used again to re-encrypt the files.

If anything fails in the workflow, mail actions send an email to a set of specified addresses to alert the customer’s team. After the email (containing the names of the files that failed) has been sent, normal processing of the remaining files continues.

Runtime Configuration

Directories, server names, and numerous other elements of this workflow are externalized to runtime configuration properties, allowing the same workflow to serve as a template for many purposes and keeping deployments consistent. This example assumes that a runtime configuration file has been defined as follows:

Runtime Configuration Properties

Download here: FLUX-SAMPLES-MFT-Example.ffc

Monitor Deadlines and SLAs (Advanced)

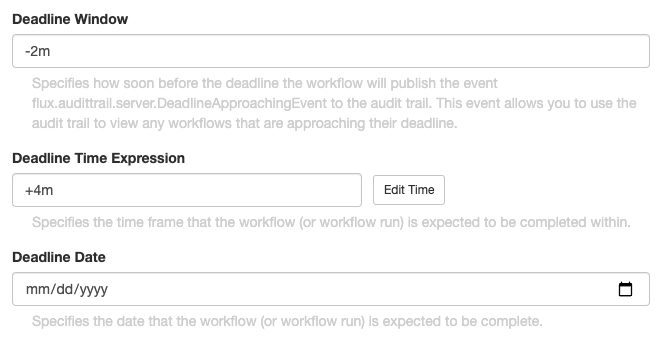

A very common scenario is the need to meet SLAs (Service Level Agreements). Flux offers a robust way of dealing with SLAs: you can set deadlines on individual workflows (as shown below), so that if a workflow exceeds its deadline, it publishes an audit trail event indicating that the deadline has passed. The Operations Console also graphically indicates any workflows that are approaching (or have exceeded) their deadlines.

The Deadline Time expression specifies the time frame within which the workflow (or workflow run) is expected to complete. For example, a deadline time expression of “+7m” means the workflow (or each run of the workflow) is expected to finish within seven minutes.

The Deadline Window specifies how long before the deadline the engine publishes the event flux.audittrail.server.DeadlineApproachingEvent to the audit trail. This event lets you use the audit trail to see which workflows are approaching their deadlines. For example, a time expression of “-7m” means “seven minutes before the date and time of the deadline.”
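The relationship between the deadline and the deadline window is simple time arithmetic, sketched here in Java. This is an illustrative model, not the engine's implementation: it assumes the deadline is measured from when the run starts, and the two-minute window in the example is a hypothetical value chosen to make all three states visible.

```java
import java.time.Duration;
import java.time.Instant;

class DeadlineSketch {
    enum Status { ON_TRACK, APPROACHING, EXCEEDED }

    // deadlineOffset models a Deadline Time such as "+7m"; window models a
    // Deadline Window such as "-2m", given as a positive Duration to subtract.
    static Status check(Instant runStart, Duration deadlineOffset, Duration window, Instant now) {
        Instant deadline = runStart.plus(deadlineOffset);
        Instant windowStart = deadline.minus(window);
        if (now.isAfter(deadline)) {
            return Status.EXCEEDED;    // corresponds to DeadlineExceededEvent
        }
        if (!now.isBefore(windowStart)) {
            return Status.APPROACHING; // corresponds to DeadlineApproachingEvent
        }
        return Status.ON_TRACK;
    }

    public static void main(String[] args) {
        Instant start = Instant.parse("2022-06-18T09:00:00Z");
        Duration deadline = Duration.ofMinutes(7);
        Duration window = Duration.ofMinutes(2);
        for (int minute : new int[] {3, 6, 8}) {
            Instant now = start.plus(Duration.ofMinutes(minute));
            System.out.println(minute + "m: " + check(start, deadline, window, now));
        }
    }
}
```

With these values, the run is on track at 3 minutes, approaching at 6 minutes, and past its deadline at 8 minutes.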

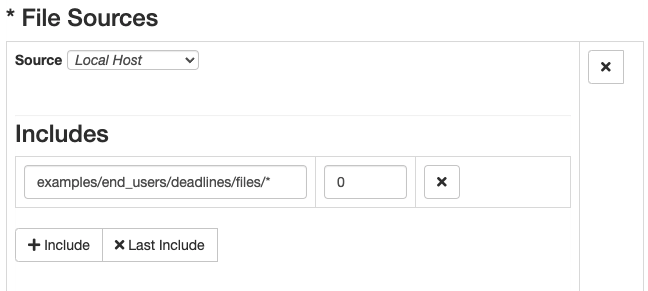

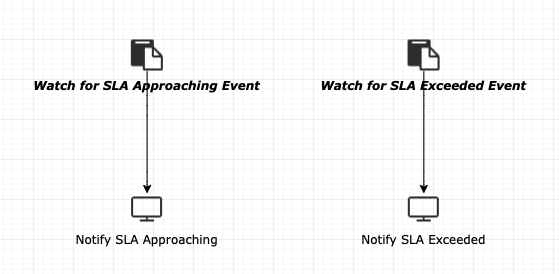

In this example, we have a pair of workflows: one that polls for files, and another that monitors the SLAs of the first.

The first workflow watches a folder for files. For the sake of the example, we’ve pointed it to a folder that contains no files, because we want the workflow to exceed its deadline.

The second workflow watches for the Audit Trail events flux.audittrail.server.DeadlineApproachingEvent and flux.audittrail.server.DeadlineExceededEvent, and prints a message whenever either event is published to the Audit Trail.

Download here: FileWatcher with deadline.ffc; SLA Monitor.ffc

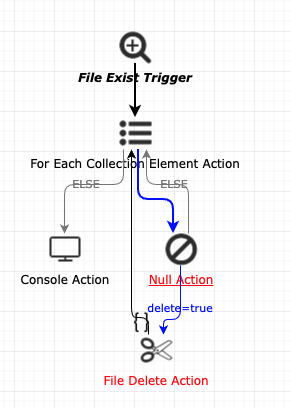

Delete Files Older than 15 Days (Advanced)

This workflow polls a folder for files, calculates how long it’s been since they were last updated/modified, and deletes all files that have a modification date of 15 or more days ago.

It uses Runtime Data Mapping to pass the File Exist trigger’s RESULT.fileinfo_matches to a collection, and to map the lastModified and url of each file to flow context variables used by the Null Action. The Null Action contains a script that calculates how many days have passed since each file was modified:

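import java.util.Date;
// lastModified is mapped from the File Exist trigger's RESULT.fileinfo_matches.
Date d1 = (Date) flowContext.get("lastModified");
Date d2 = new Date();
// Whole days elapsed since the file was last modified.
long diff = d2.getTime() - d1.getTime();
long diffDays = diff / (24 * 60 * 60 * 1000);
System.out.print(diffDays + " days, ");
// Set the flag on every pass so a "true" left over from the previous
// file in the loop cannot cause this file to be deleted.
flowContext.put("delete", diffDays >= 15 ? "true" : "false");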

Then, if 15 or more days have passed since the file was modified, the workflow flows into a File Delete action, which deletes the file and then flows back to the For Each Collection Element action to process the next file in line.
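To test the age rule outside Flux, the same check can be sketched with java.nio.file and java.time. This standalone illustration is not the sample workflow: it reads each file's last-modified time directly instead of through Runtime Data Mapping, and, like the Null Action script, it counts whole 24-hour periods. Run it against a scratch directory first, since it deletes files.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

class RemoveOldFilesSketch {
    static final long MAX_AGE_DAYS = 15;

    // Whole days between lastModified and now, truncated like the Null Action script.
    static long ageInDays(Instant lastModified, Instant now) {
        return Duration.between(lastModified, now).toDays();
    }

    // Deletes regular files in dir that were modified MAX_AGE_DAYS or more days ago.
    static List<String> removeOld(Path dir, Instant now) throws IOException {
        List<Path> expired = new ArrayList<>();
        try (DirectoryStream<Path> files = Files.newDirectoryStream(dir)) {
            for (Path file : files) {
                if (Files.isRegularFile(file)
                        && ageInDays(Files.getLastModifiedTime(file).toInstant(), now) >= MAX_AGE_DAYS) {
                    expired.add(file);
                }
            }
        }
        // Delete after the listing is closed, one delete per expired file.
        List<String> deleted = new ArrayList<>();
        for (Path file : expired) {
            Files.delete(file);
            deleted.add(file.getFileName().toString());
        }
        return deleted;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: RemoveOldFilesSketch <directory>");
            return;
        }
        System.out.println("Deleted: " + removeOld(Paths.get(args[0]), Instant.now()));
    }
}
```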

Download here: FLUX-SAMPLES-RemoveOldFiles.ffc